What Happens When We Outsource Our Thinking?

AI fixed my bug in minutes, but when asked to explain the solution, my mind went blank. The real bug? I’d outsourced not just the work, but the understanding—and suddenly, my code didn’t feel like mine at all. What are we losing when AI does our thinking for us?

Last week, I spent three days trying to debug a particularly nasty edge case in my backend system. After hours of frustration, I did what many developers now do: I copied the error message into Claude and asked for help. Within minutes, I had a solution. Relief washed over me as I implemented the fix, committed the code, and closed my laptop.

But something strange happened the next morning. When my teammate asked me to explain the fix, I couldn't articulate what had actually caused the bug. I remembered copying the solution, but the underlying logic had never been processed by my own brain. I had outsourced not just the work, but the understanding.

This experience took on new meaning when I stumbled across a fascinating research paper examining the neural and behavioral impacts of using LLMs versus traditional search engines versus "going it alone" for essay writing tasks. The findings should give all of us in the software industry serious pause.

The Research: What Happens to Our Brains When We Use AI

The study tracked 54 participants across four months as they wrote essays under three conditions: using ChatGPT (the "LLM group"), using web search, or using no tools at all (the "Brain-only group"). Researchers measured brain activity with EEG and assessed various outcomes like memory retention and perceived ownership of the work.

The results? Striking differences in neural connectivity patterns that tell a worrying story about what happens when we offload our thinking:

- Brain connectivity scaled inversely with external assistance: The Brain-only group showed the strongest neural engagement, with the LLM group showing up to 55% reduced connectivity compared to those who wrote without tools.

- Memory encoding suffered dramatically: A staggering 83% of LLM users couldn't quote their own essays immediately after writing them, and none could quote correctly. This persisted across sessions, with 33% still unable to quote correctly by the third session.

- Sense of ownership diminished: Brain-only participants unanimously claimed full ownership of their work, while LLM users showed "fragmented and conflicted" authorship - often claiming partial credit (50-90%) or explicitly denying ownership entirely.

When I read these findings, I immediately saw the parallel to what was happening in my own coding practice. The fixes I implemented after consulting an LLM never quite felt like "my code" - and worse, the understanding never quite made it into my long-term memory.

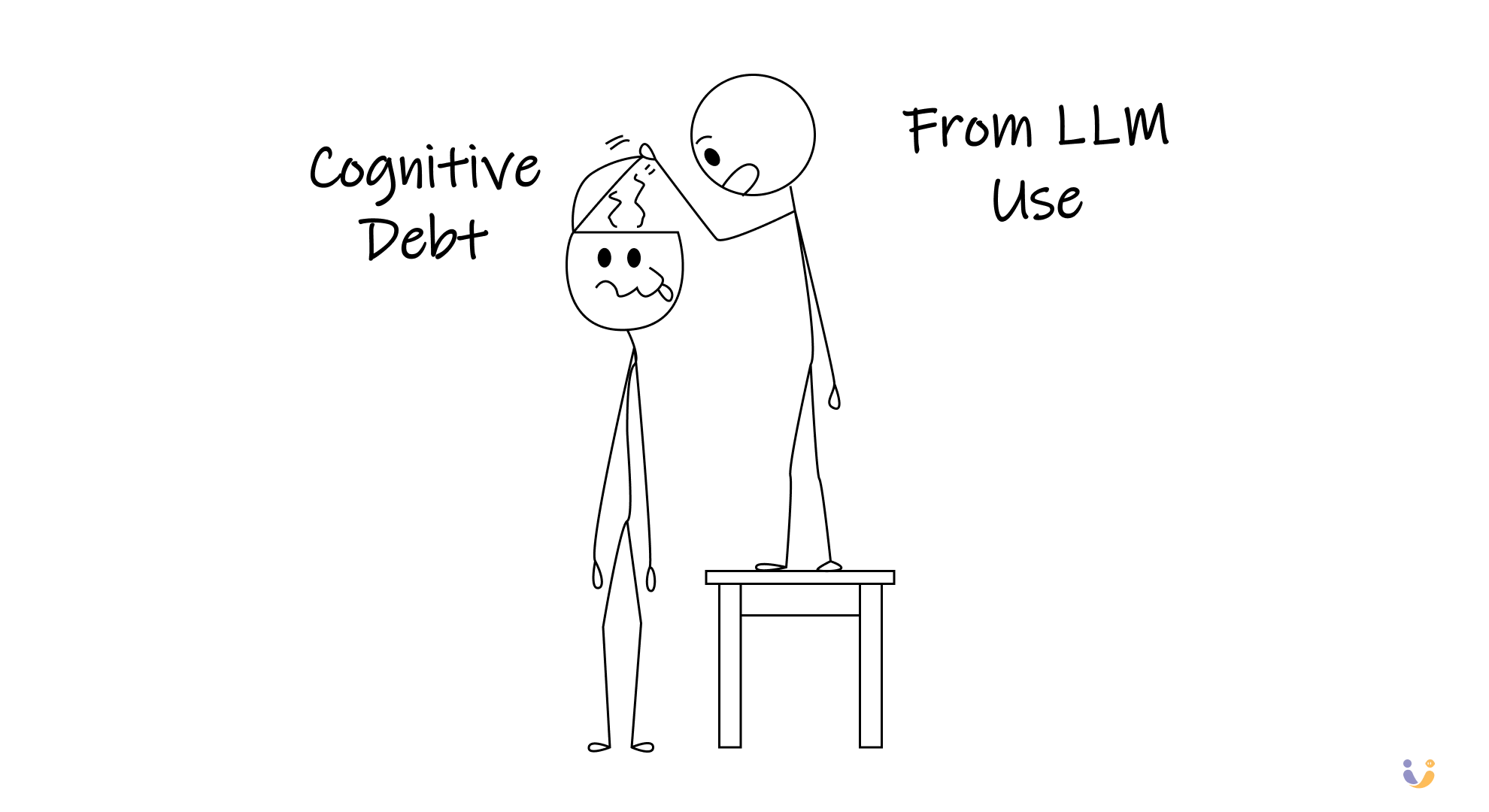

The Developer's Cognitive Debt

While this study focused on essay writing, the implications for software development are profound. Consider these parallels:

- Reduced neural engagement during problem-solving: When we paste errors into ChatGPT without attempting to debug first, we're likely experiencing the same reduced neural connectivity. We're getting solutions without building the mental models that make us better engineers.

- Impaired memory of implementation details: How often have you implemented an LLM-suggested solution, only to be unable to explain it to a colleague the next day? This mirrors the study's finding about quote retention.

- Fragmented ownership of code: The study found LLM users felt diminished ownership of their writing. In code reviews, I've noticed engineers who heavily used AI assistance often struggle to defend implementation decisions - because they didn't really make those decisions.

- Narrower conceptual exploration: The study found LLM users explored a narrower set of ideas across sessions, suggesting decreased critical engagement. In coding, this might manifest as implementing the first solution offered rather than considering multiple approaches.

- Skill atrophy over time: Perhaps most concerning was the finding that when LLM-dependent participants were suddenly asked to work without tools, they underperformed significantly compared to those who had been working without assistance all along.

One developer friend described it perfectly: "Using GPT for coding feels like taking a helicopter to the top of a mountain instead of hiking. You get there faster, but you miss the terrain knowledge you'd need if you ever had to find your way back on foot."

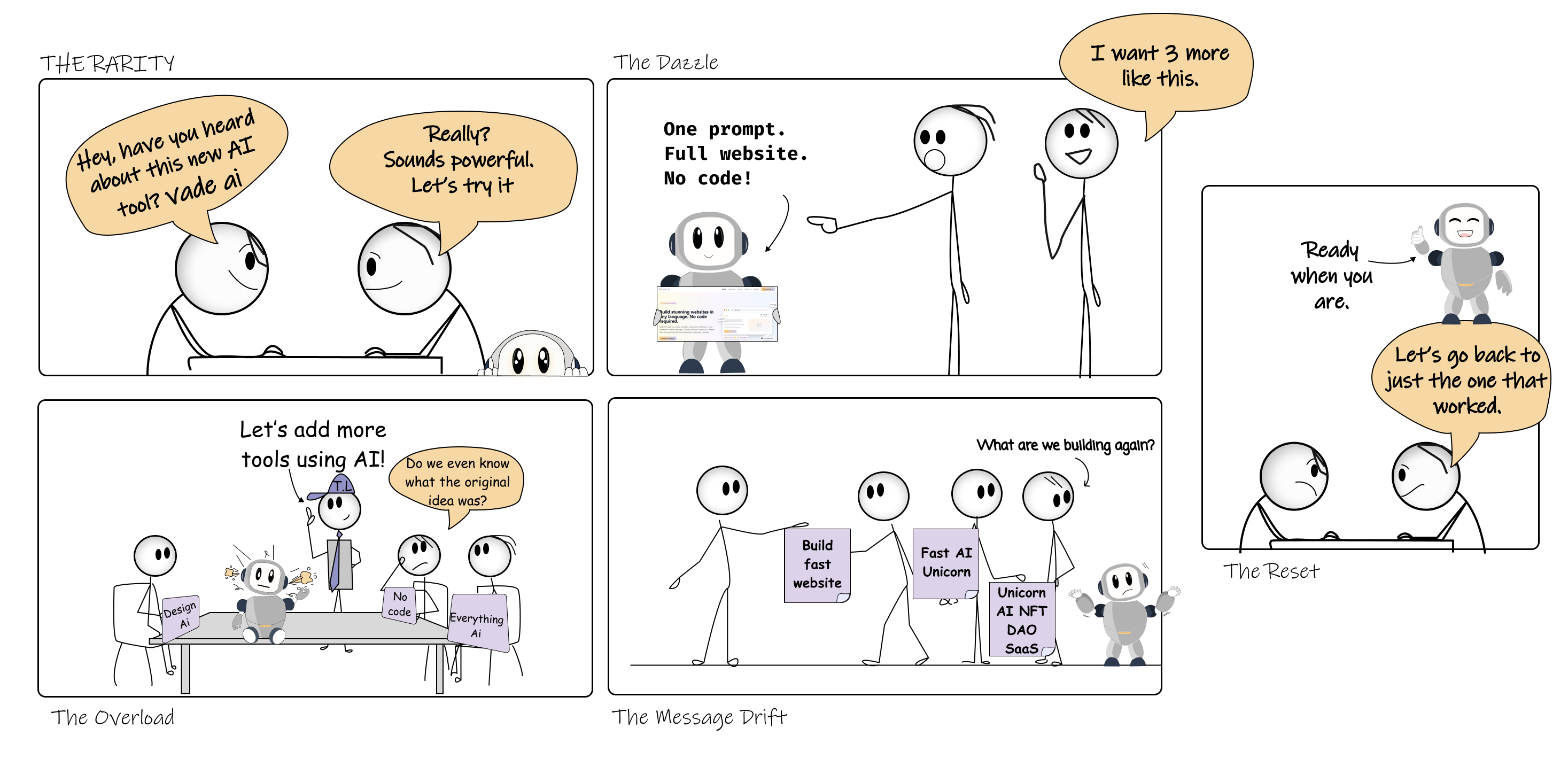

Beyond Individual Impact: The Communication Cascade

When we use LLMs to inflate simple messages ("Meet at 5PM" becomes three paragraphs) or condense complex ones, we're creating a new form of communication debt.

Imagine this scenario in engineering:

- A PM asks GPT to expand a simple feature request into detailed requirements

- An engineer asks GPT to summarize those requirements

- The engineer asks GPT to generate code based on their understanding

- A reviewer asks GPT to explain what that code does

- The PM asks GPT to verify if the implementation matches the original intent

At each step, something gets lost in translation. The neural disengagement found in the study compounds across the entire process. Everyone feels productive, but no one deeply understands what's happening.

Your observation about virtual avatars attending meetings takes this to its logical extreme. We'd have machines talking to machines, with humans as mere intermediaries who don't fully process or retain the information. As you said, we'd burn tremendous energy to have machines communicate in human language... with other machines.

Finding a Better Balance

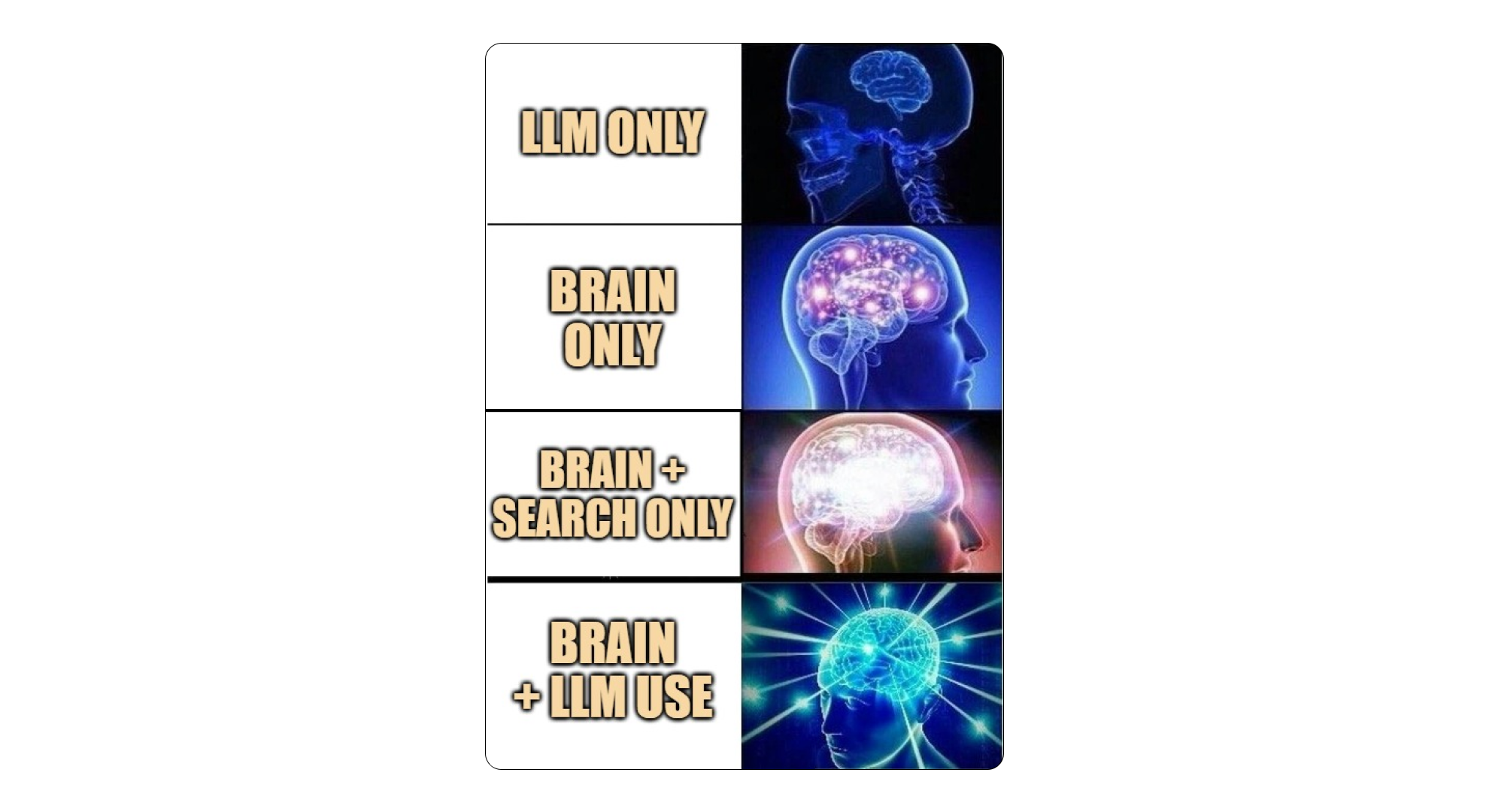

The research offers one fascinating ray of hope: participants who initially worked without tools and later gained access to LLMs showed significantly better outcomes than those who relied on LLMs from the start.

The Brain-to-LLM group maintained stronger neural connectivity, better memory retention, and higher performance even when tools were introduced. This suggests that building cognitive foundations first, then adding AI assistance, might be the optimal path.

For developers, this could mean:

- Attempting to solve problems manually before reaching for AI assistance

- Using AI primarily for tasks where you've already developed strong mental models

- Implementing deliberate practice of core skills without AI assistance

- Explaining AI-generated solutions in your own words before implementing them

I've started a personal practice of writing out my understanding of any AI-generated code before committing it. This simple step forces me to process the solution rather than just copying it.

The Energy Cost Angle

The study also notes a sobering environmental reality: LLM queries consume approximately 10 times more energy than search queries. When we're having AIs talk to AIs, as in your communication cascade example, we're multiplying this cost for questionable benefit.

In an industry increasingly concerned with green computing, we should consider not just the cognitive costs of AI assistance but the material and environmental ones as well.

A Middle Path Forward

I don't think the answer is abandoning AI coding assistants entirely. They're powerful tools that can help us navigate unfamiliar libraries, generate boilerplate, and sometimes spot bugs we've gone blind to. But this research suggests we need to be much more intentional about when and how we use them.

Perhaps the ideal approach is to build strong foundations through unassisted practice, then strategically deploy AI for specific tasks while maintaining our cognitive engagement throughout the process.

What I'm certain of is that if we don't think carefully about these questions, we risk raising a generation of developers who can prompt an AI but can't reason through a problem when the AI fails them. And in those critical moments when systems break in unexpected ways, that's exactly the skill we'll need most.

References

[1] Kosmyna, N., Hauptmann, E., Yuan, Y.T., Situ, J., Liao, X-H., Beresnitzky, A.V., Braunstein, I., & Maes, P. (2025). Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task. arXiv preprint arXiv:2506.08872. https://arxiv.org/abs/2506.08872