How We Stole Performance Back from PostgreSQL

Our 4MB JSON blob nearly brought PostgreSQL to its knees—until we ditched recursive queries and embraced client-side tree building. What started as a performance nightmare became a lesson in keeping things simple, and our UI now loads faster than ever. Sometimes, less is truly more.

How a 4MB JSON nightmare and a recursive query disaster led us to discover the joy of client-side tree construction

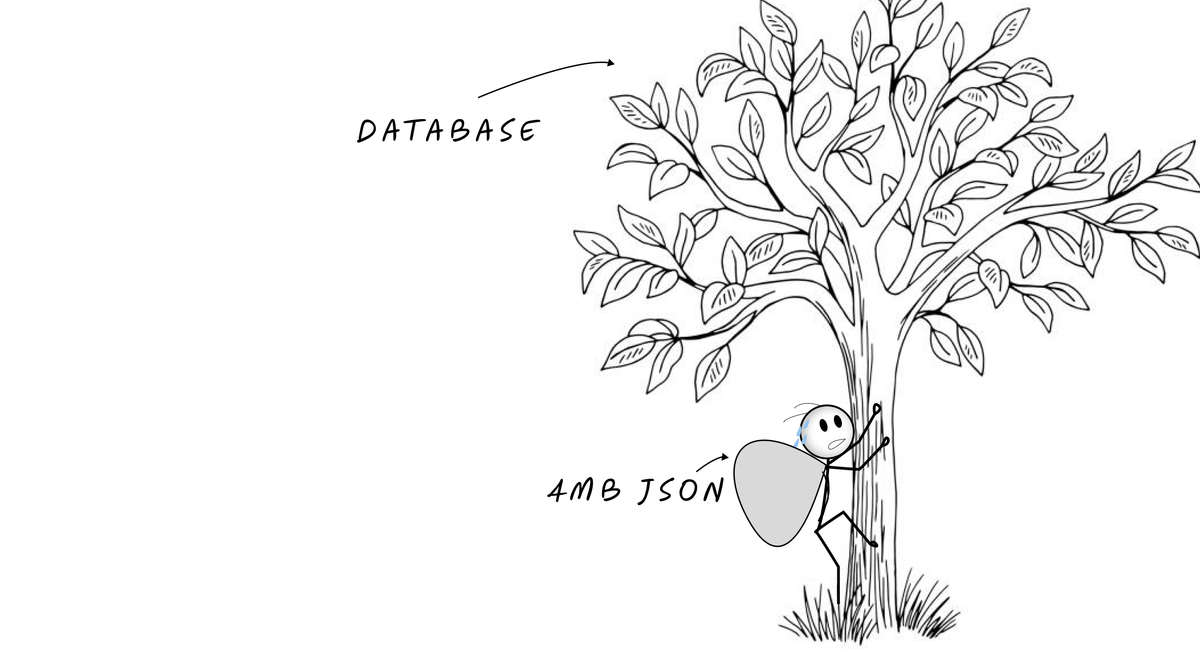

The JSON Monster That Ate Our Database

Let me tell you about the day our UI tree ate our database. Not literally, of course—databases don't have digestive systems—but watching our PostgreSQL instance choke on 4MB JSON blobs felt pretty damn close to indigestion.

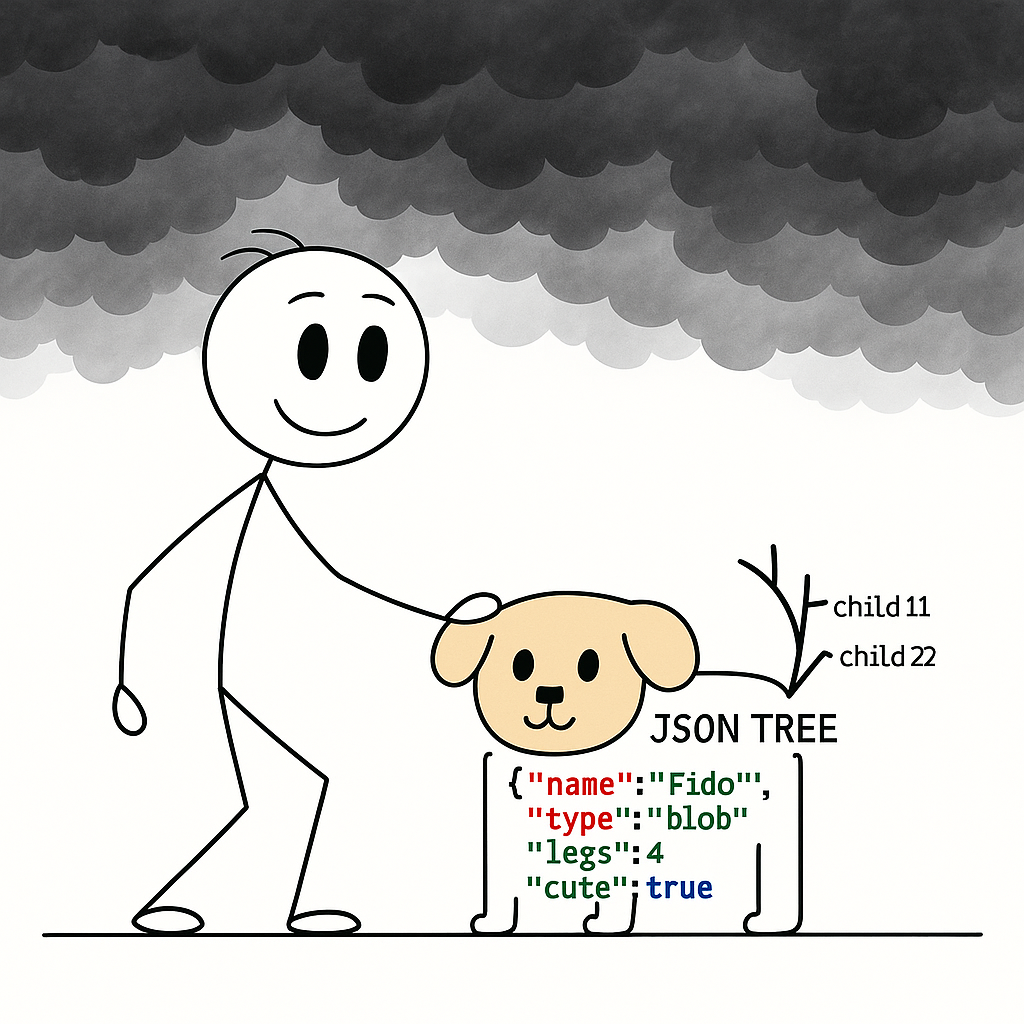

We were building Vade AI, a visual UI builder where everything is a node. Think of it like a family tree, but instead of relatives, you have UI components, and instead of awkward dinner conversations, you have recursive data structures that grow faster than bamboo in a rainstorm.

Initially, our approach was beautifully naive. We stored the entire UI tree as a single JSONB document in our workspace table:

CREATE TABLE vai.workspace (

id uuid NOT NULL DEFAULT gen_random_uuid(),

org_id uuid,

app_id uuid,

title text,

tree jsonb -- This little field became our nemesis

);

The logic seemed sound: one query, one JSON blob, instant UI tree. What could go wrong?

Everything. Everything could go wrong.

As users built more complex interfaces, our JSON trees started resembling the Amazon rainforest—sprawling, dense, and impossible to navigate efficiently. A typical tree looked something like this:

{:componentName "Root"

:props {:title "My Amazing App"}

:children [{:componentName "Header"

:props {:backgroundColor "#ff0000"}

:children [{:componentName "Logo" ...}

{:componentName "Navigation"

:children [...]}]}

{:componentName "MainContent"

:children [{:componentName "Sidebar"

:children [...]}

{:componentName "ContentArea"

:children [...]}]}]}

Multiply this by hundreds of nested components, and you get JSON documents that would make a tax lawyer weep. Our largest tree hit 4.2 megabytes of pure, concentrated hierarchical chaos.

The Performance Cliff That Knocked Us Flat

PostgreSQL applies TOAST (The Oversized-Attribute Storage Technique) compression to JSONB documents exceeding 2KB, storing them outside the main table in a TOAST table. When your JSON hits the multi-megabyte range, you're not just reading data—you're performing archaeological excavations.

Every time someone loaded a workspace, PostgreSQL had to:

- Fetch the TOASTed data from separate storage

- Decompress the entire 4MB blob

- Parse the JSONB structure

- Send it over the wire to our ClojureScript frontend

The result? Loading times that made dial-up internet look speedy. Users were reporting 15-30 second load times for complex interfaces. That's enough time to make coffee, question your life choices, and consider a career in farming.

The Great Normalization Migration

Faced with performance numbers that would embarrass a Windows Vista startup sequence, we decided to do what database textbooks have been screaming about for decades: normalize our data.

Out went the monolithic JSON blob. In came a proper relational structure:

-- The main node table

CREATE TABLE vai.node (

id uuid NOT NULL DEFAULT gen_random_uuid(),

org_id uuid,

app_id uuid,

workspace_id uuid,

root_id uuid,

parent_id uuid,

component_name text NOT NULL,

source_name text DEFAULT '',

props jsonb NOT NULL DEFAULT '{}',

hidden boolean NOT NULL DEFAULT false,

deleted boolean NOT NULL DEFAULT false

);

-- The parent-child relationships

CREATE TABLE vai.node_children (

index integer NOT NULL,

node uuid NOT NULL,

children uuid NOT NULL,

CONSTRAINT node_children_pkey PRIMARY KEY (node, children)

);

This felt like progress. Instead of one massive JSON document, we had proper foreign keys, indexable columns, and the ability to use primary and foreign keys, and constraints to enforce data integrity.

Our Clojure data model looked cleaner too:

(def entity

(model-spec/validate-entity!

{:componentName :Entity

:props {:original-entity :node

:attributes [{:original-key :id

:original-type :uuid

:primary-key true}

{:original-key :parent-id

:alias-key :parentId

:original-type :uuid

:optional true}

{:original-key :component-name

:alias-key :componentName

:original-type :string}

{:original-key :children

:original-type :ref

:target :node/id

:cardinality :many}]}}))

But normalization brought its own demon: how do you reconstruct a tree from normalized data?

Enter the Recursive CTE Dragon

Like every developer who's read a PostgreSQL manual, I thought I had the perfect solution: recursive Common Table Expressions (CTEs). These bad boys are designed exactly for traversing hierarchical data structures.

My first attempt looked something like this:

WITH RECURSIVE tree_builder AS (

-- Base case: find the root nodes

SELECT id, parent_id, component_name, props, 0 as depth,

ARRAY[id] as path

FROM vai.node

WHERE workspace_id = $1 AND parent_id IS NULL

UNION ALL

-- Recursive case: find children

SELECT n.id, n.parent_id, n.component_name, n.props,

tb.depth + 1, tb.path || n.id

FROM vai.node n

INNER JOIN tree_builder tb ON n.parent_id = tb.id

INNER JOIN vai.node_children nc ON nc.node = tb.id

AND nc.children = n.id

)

SELECT * FROM tree_builder ORDER BY depth, path;

This query was supposed to be my victory lap. Recursive queries are typically used to deal with hierarchical or tree-structured data, and PostgreSQL's recursive CTEs are specifically designed for this use case.

The Recursive Query Performance Apocalypse

The recursive CTE worked. Technically. It reconstructed our tree structure perfectly, maintaining parent-child relationships and ordering everything correctly.

It was also slower than continental drift.

What should have been millisecond queries were taking 8-15 seconds for moderately complex trees. For our largest workspaces, query times approached 30 seconds. Users were experiencing what felt like database timeouts on every page load.

The problem wasn't just the recursion—it was what PostgreSQL had to do during recursion. Each "fan-in" multiplies the number of CTE rows as the "path" column differs. Our tree structure, with its multiple levels and complex parent-child relationships, was creating an exponential explosion of intermediate results.

Here's what was happening under the hood:

- Materialization Nightmare: WITH provides a way to write auxiliary statements for use in a larger query, but recursive CTEs in PostgreSQL are always materialized. Every iteration creates a working table that grows larger with each level of recursion.

- Join Explosion: Each recursive step required joins between the current result set and the node table. With deep trees, this meant joining against progressively larger intermediate tables.

- Path Tracking Overhead: When using CTE Recursion, UNION performance can degrade if path and cycle are tracked. Our path tracking was creating massive amounts of duplicate data.

The query execution plan was a masterpiece of algorithmic horror. PostgreSQL was essentially building the entire tree in memory, level by level, before returning any results. For a tree with 1,000 nodes across 10 levels, we were generating tens of thousands of intermediate rows just to return the original 1,000 nodes in the right order.

The Moment of Clarity

After three weeks of query optimization hell—trying different indexes, rewriting the recursion logic, even experimenting with stored procedures—I had an epiphany while debugging why a simple tree with 200 nodes was taking 12 seconds to load.

I was staring at the PostgreSQL execution plan when it hit me: Why am I trying to build the tree in the database?

The database is great at storing relationships, indexing data, and ensuring consistency. But tree construction? That's just data manipulation. And where do we typically do data manipulation in modern web applications?

On the client.

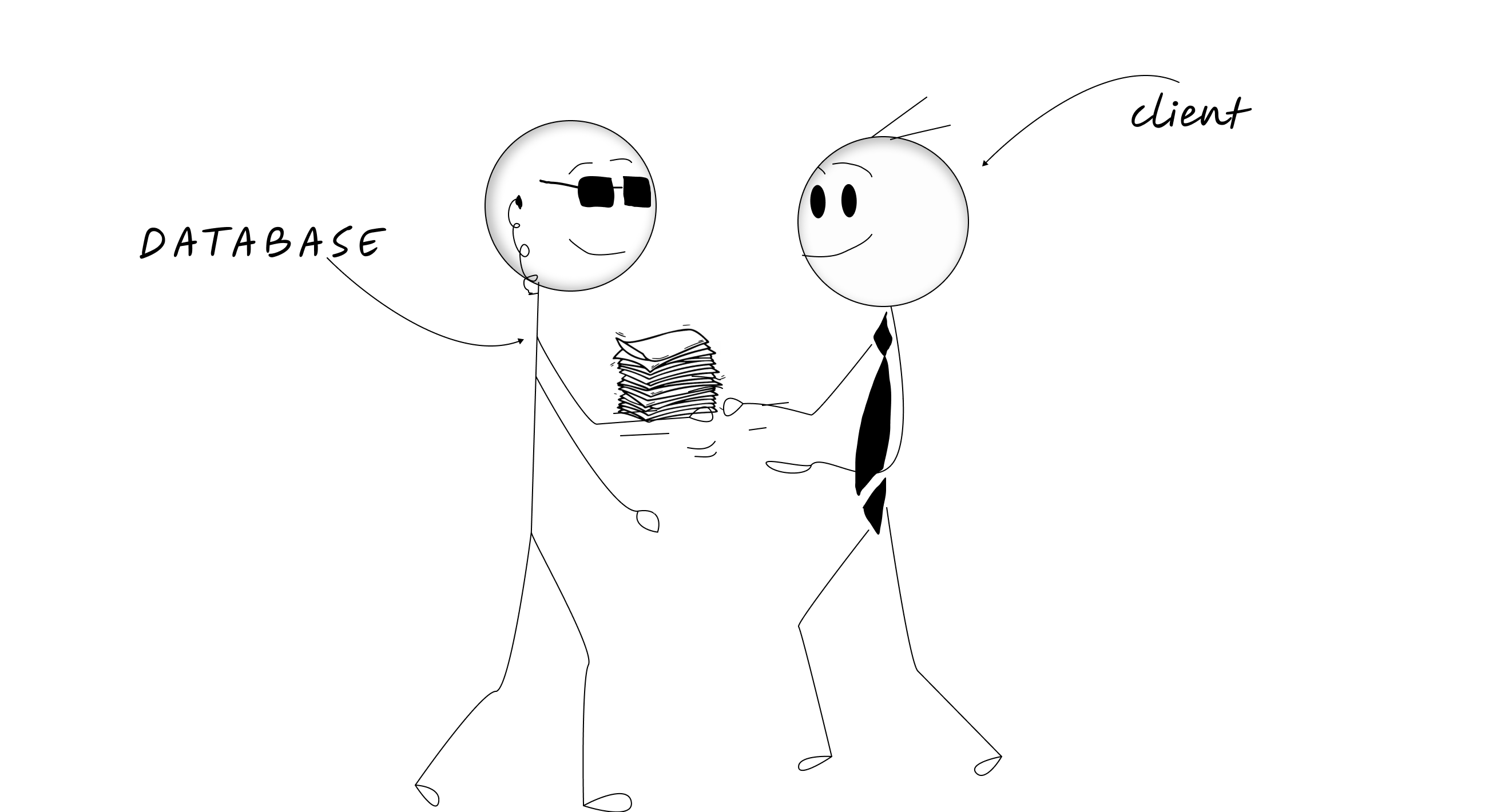

The Elegant Solution: Fetch Flat, Build Locally

The solution was embarrassingly simple: instead of asking PostgreSQL to build our tree, we'd fetch the data flat and construct the tree where we needed it—in our ClojureScript frontend.

Our new query strategy:

-- Simple, fast query: get nodes with their immediate children

SELECT

p.id, p.component_name, p.props, p.parent_id,

array_agg(

json_build_object(

'id', c.id,

'componentName', c.component_name,

'props', c.props,

'parentId', c.parent_id

) ORDER BY nc.index

) as children

FROM vai.node p

LEFT JOIN vai.node_children nc ON nc.node = p.id

LEFT JOIN vai.node c ON c.id = nc.children

WHERE p.workspace_id = $1

GROUP BY p.id, p.component_name, p.props, p.parent_id;

This query is beautifully boring. No recursion, no complex joins, no intermediate table explosions. Just a straightforward GROUP BY with an array aggregation to collect immediate children.

Our EQL query in ClojureScript became:

(def Entity

(f.rc/nc [:vai.node/id :vai.node/orgId :vai.node/appId

:vai.node/rootId :vai.node/parentId

:vai.node/componentName :vai.node/sourceName

:vai.node/props :vai.node/hidden :vai.node/deleted

{:vai.node/children [:vai.node/id :vai.node/orgId :vai.node/appId

:vai.node/rootId :vai.node/parentId

:vai.node/componentName :vai.node/sourceName

:vai.node/props :vai.node/hidden :vai.node/deleted]}]

{:componentName ::entity

:ident (fn [_ props] [:vai.node/id (:vai.node/id props)])

:initial-state (fn [{:keys [children] :as node}]

(assoc node

:vai.node/children

(mapv (partial f.rc/get-initial-state Detail) children)))}))

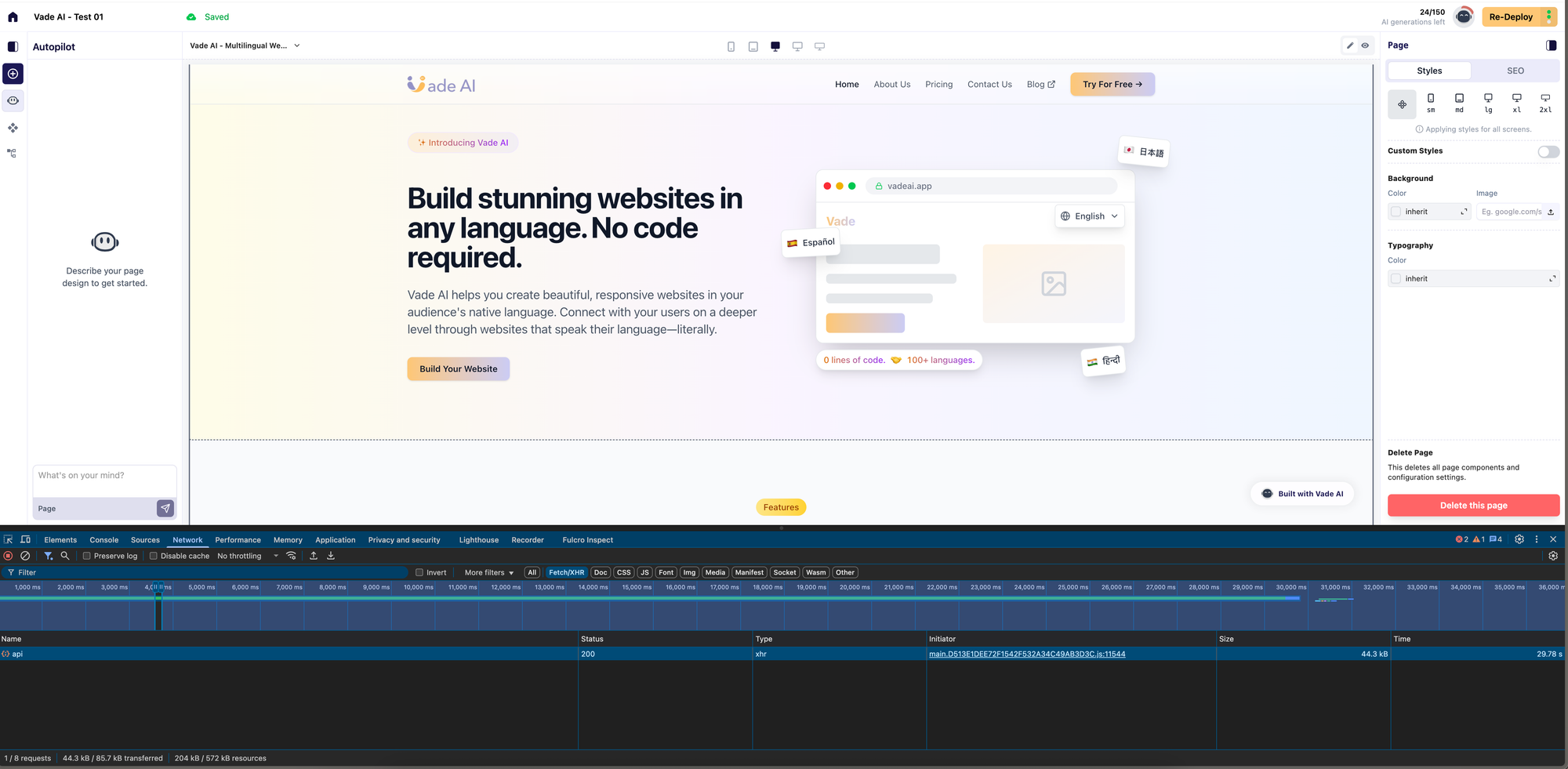

The Performance Turnaround

The results were dramatic:

- Database query time: Dropped from 8-30 seconds to 50-200 milliseconds

- Tree construction time: 10-50 milliseconds on the client

- Total load time: Under 300 milliseconds for even our largest workspaces

- Database load: Reduced by ~90%

- Memory usage: Drastically lower (no more 4MB JSON blobs)

But the performance improvement was just the beginning. This approach unlocked several unexpected benefits:

1. Incremental Loading

Once you have flat data, you can easily implement progressive tree construction. Need just the first two levels? Load those first. User expands a section? Fetch those children on demand.

2. Client-Side Caching

With tree construction happening client-side, we could cache the built trees in memory. Switching between workspaces became instant—no database queries required for previously loaded trees.

3. Better Error Handling

When tree construction fails with bad data, it fails fast on the client with clear error messages. No more mysterious database timeouts when someone creates a malformed node structure.

4. Easier Testing

Testing tree construction logic became trivial. No database setup required—just pass in flat data and verify the tree structure.

The Broader Lesson: Question Your Assumptions

This experience taught me something fundamental about distributed system design: just because you can do something in the database doesn't mean you should.

Performance is more controllable when you're conscious about where computation happens. Databases excel at data storage, indexing, and ensuring consistency. But complex data transformations? Modern JavaScript engines are often faster, more flexible, and definitely more debuggable.

The key insights:

- Recursive queries have exponential complexity characteristics that can bite you as data grows

- Client-side tree construction scales linearly with the number of nodes

- Network transfer of flat data is often faster than complex database computations

- Caching constructed trees client-side provides better user experience than any database optimization

When to Build Trees Where

Not every tree construction should move to the client. Here's my decision framework:

Use database tree construction when:

- Tree depth is limited (< 5 levels)

- Total nodes are small (< 100)

- Tree structure rarely changes

- You need server-side tree operations (aggregations, complex filtering)

Use client-side tree construction when:

- Trees can grow large or deep

- You need interactive tree manipulation

- Tree construction is part of the UI rendering process

- You can benefit from client-side caching

The Implementation Details

For those wanting to implement something similar, here's the practical approach:

1. Design Your Flat Query

Keep it simple. Fetch nodes with their immediate children only:

SELECT node.*, array_agg(children.*) as immediate_children

FROM nodes

LEFT JOIN node_children ON node.id = node_children.parent_id

LEFT JOIN nodes children ON children.id = node_children.child_id

WHERE node.workspace_id = $1

GROUP BY node.id

2. Build Trees Incrementally

Don't construct the entire tree at once. Build from the root down:

(defn build-tree [flat-nodes root-id]

(let [nodes-by-id (index-by :id flat-nodes)

root-node (get nodes-by-id root-id)]

(assoc root-node

:children

(map #(build-tree flat-nodes (:id %))

(:immediate-children root-node)))))

3. Cache Aggressively

Once built, trees should be cached until the underlying data changes:

(defonce tree-cache (atom {}))

(defn get-tree [workspace-id]

(if-let [cached (@tree-cache workspace-id)]

cached

(let [tree (build-tree-from-db workspace-id)]

(swap! tree-cache assoc workspace-id tree)

tree)))

4. Handle Updates Intelligently

When nodes change, invalidate only affected subtrees:

(defn invalidate-subtree [workspace-id node-id]

(swap! tree-cache

update workspace-id

#(remove-subtree % node-id)))

The End Result: System Design Zen

What started as a performance crisis became a lesson in system design philosophy. Sometimes the best solution isn't to optimize the complex thing—it's to make the complex thing simple.

Our current architecture:

- Database: Stores normalized node data with simple relationships

- API: Returns flat data with minimal processing

- Client: Constructs trees as needed, caches aggressively

- User: Experiences sub-second load times on complex interfaces

The recursive CTE approach felt elegant in theory but was a disaster in practice. Our flat-data-plus-client-construction approach feels almost primitive by comparison, but it's fast, debuggable, and scales beautifully.

Sometimes in system design, the most sophisticated solution is the simplest one.

Your Turn: Have You Been Building Trees Wrong?

Before you implement that recursive query for your hierarchical data, ask yourself:

- Do I really need the tree structure in the database?

- Could I fetch flat data and build the tree where I need it?

- Am I optimizing for the wrong part of the system?

The next time you're facing a gnarly tree-building performance problem, remember: sometimes the best way to climb a tree is to bring it down to your level first.

Have you wrestled with recursive queries and lived to tell the tale? Hit me up with your tree construction war stories. I promise to be sympathetic—we've all been there, staring at EXPLAIN ANALYZE output wondering where our life went wrong.