Escaping Framework Prison: Why We Ditched Agentic Frameworks for Simple APIs

I ditched AI frameworks after battling endless abstraction hell. Turns out, building from scratch was faster, easier, and actually made sense—no more fighting my tools, just solving real problems.

A journey from LangChain hell to production paradise

The Great Framework Gold Rush

Remember when everyone lost their minds over LangChain? When every AI developer suddenly became an "agentic framework expert" overnight? When GitHub was flooded with tutorials showing you how to build a "simple" chatbot in just 47 lines of abstracted framework code?

Yeah, I lived through that madness too. And like many developers who got swept up in the framework frenzy, I learned the hard way that sometimes the "revolutionary" new tools are just elaborate ways to make simple things complicated.

A couple of months ago, I started building an AI-native architecture for Vade AI. By "AI-native," I meant two critical things: our platform needed to interact seamlessly with AI providers and their LLMs, and it needed to integrate with other LLM tools and MCP clients. Sounds straightforward, right?

Wrong.

The Framework Seduction

Like every other developer who'd been following the AI space, I initially reached for the obvious tools. LangChain was the darling of the community. LangGraph promised orchestration nirvana. The documentation looked sleek, the examples were compelling, and everyone was talking about how these frameworks would "democratize AI development."

The seduction was real. These frameworks promised to handle all the complexity for you. Tool calling? Abstracted away. Memory management? Built-in. Workflow orchestration? Just connect some nodes in a graph. It felt like getting a Swiss Army knife when all you needed was a butter knife.

But here's what nobody tells you about framework seduction: the honeymoon phase ends the moment you try to do something slightly original.

As Paul Ein perfectly captured in our conversation: "I kept telling them that it works well if you have a standard use case, but the second you need to do something a little original you have to go through 5 layers of abstraction just to change a minute detail."

The First Red Flag: Simple Tasks Become Herculean

The first warning sign came when I tried to implement something ridiculously simple: a semantic search filter. Not some cutting-edge AI breakthrough—just a basic filter on semantic search results. Something that should take maybe 20 minutes to implement from scratch.

Two hours later, I was deep in LangChain's documentation, trying to figure out why their framework had "some restrictions at somewhere down the line" that prevented me from adding this elementary feature. The abstraction layers were so thick that I couldn't even understand where my simple filter was getting blocked.
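For a sense of scale, here is a minimal sketch of what "a basic filter on semantic search results" can look like from scratch. It is illustrative only: the `Doc` class, the `where` predicate, and the two-dimensional toy embeddings are assumptions made for this example, and a real system would get its vectors from an embedding model and probably a vector store.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Doc:
    text: str
    embedding: list[float]
    metadata: dict = field(default_factory=dict)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query_vec, docs, where=None, top_k=3):
    """Rank docs by similarity to query_vec, keeping only those that pass `where`."""
    candidates = [d for d in docs if where is None or where(d.metadata)]
    ranked = sorted(candidates, key=lambda d: cosine(query_vec, d.embedding), reverse=True)
    return ranked[:top_k]


# Toy data: hand-written vectors stand in for real embeddings.
docs = [
    Doc("Refund policy", [1.0, 0.0], {"lang": "en"}),
    Doc("Politique de remboursement", [0.9, 0.1], {"lang": "fr"}),
    Doc("Shipping times", [0.0, 1.0], {"lang": "en"}),
]
hits = search([1.0, 0.0], docs, where=lambda m: m["lang"] == "en", top_k=2)
```

The filter is one list comprehension applied before ranking. Whether to filter before or after scoring is a design choice you can actually see and change here.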

In a survey of agent builders, the number one answer about the biggest limitation in putting agents into production was "performance quality": it's still really hard to make these agents work. But what the survey didn't capture was why it's hard to make them work.

The Abstraction Trap

Here's the fundamental problem with most agentic frameworks: they're trying to solve problems that don't exist while creating problems that definitely do exist.

Think about what you actually need to build a functional AI system:

- API calls to LLM providers (already dead simple)

- Tool calling (natively supported by the major providers, with broadly similar JSON-schema formats)

- State management (basic programming)

- Error handling (basic programming)

- Memory/context management (basic data structures)

None of these are particularly hard problems. They're solved problems. The complexity comes from the frameworks themselves, not from the underlying tasks.
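As one illustration of the "basic data structures" point, context management can be a plain list of message dicts plus a trimming function. This is a sketch under stated assumptions: `trim_history` is a hypothetical helper, and it budgets by character count as a crude stand-in for tokens, which a real system would count with the provider's tokenizer.

```python
def trim_history(messages, max_chars=2000):
    """Keep system messages plus the most recent messages that fit in max_chars."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_chars - sum(len(m["content"]) for m in system)
    kept = []
    for msg in reversed(rest):      # walk from newest to oldest
        cost = len(msg["content"])
        if cost > budget:
            break                   # everything older is dropped too
        kept.append(msg)
        budget -= cost
    return system + kept[::-1]      # restore chronological order
```

Because the whole policy is a dozen lines, swapping "drop the oldest" for "summarize the oldest" is a local change rather than a framework extension point.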

As one developer put it: "I kept telling them that it works well if you have a standard use case, but the second you need to do something a little original you have to go through 5 layers of abstraction just to change a minute detail. Furthermore, you won't really understand every step in the process, so if any issue arises or you need to improve the process, you will start back at square 1."

This perfectly captures the abstraction trap. Frameworks promise to make things easier, but they actually make debugging harder, customization impossible, and understanding optional.

The Breaking Point: When Frameworks Fail in Production

The breaking point came when I realized something that should have been obvious from the start: frameworks are training wheels that you eventually need to throw away.

Paul Ein nailed it when he said: "I see frameworks such as LangChain, LangGraph, LlamaIndex, and all of these as really useful just to quickly see that something works. You have this quick idea and you want to see in one hour, two hours, that something works, and you don't want to waste time doing anything custom, so using these frameworks is good for that. And afterwards I will just throw them away, to be honest."

This is the honest truth that framework evangelists don't want to admit: frameworks are prototyping tools, not production tools.

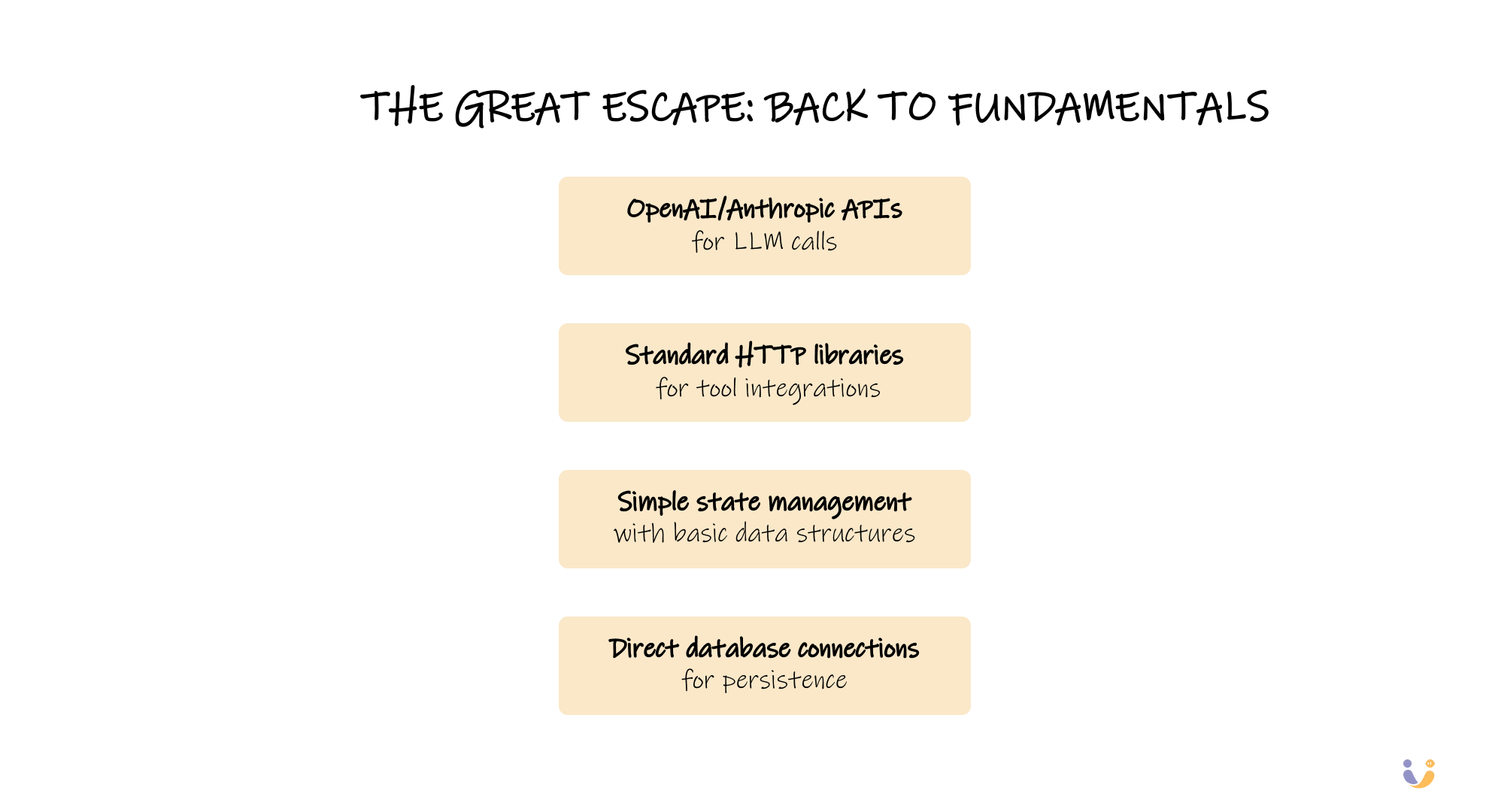

The Great Escape: Back to Fundamentals

So we did something radical. We threw away the frameworks entirely and went back to first principles.

Instead of wrestling with LangChain's abstractions, we built our own thin layer directly on top of:

- OpenAI/Anthropic APIs for LLM calls

- Standard HTTP libraries for tool integrations

- Simple state management with basic data structures

- Direct database connections for persistence

The result? A system that was:

- Faster to develop (no framework learning curve)

- Easier to debug (we understood every line)

- Simpler to maintain (no hidden abstractions)

- More reliable (no framework bugs)

- Actually scalable (no framework limitations)

Feeling the Algorithm

Paul made another crucial point that resonates deeply: "I always say that the most important thing is to have a strong intuition on the algorithm, to feel it. Because when you have to combine a lot of other algorithms and components into bigger systems, if you don't feel it, if you don't have a strong intuition that this should come in there, that should be plugged in like this, they should work together like this, I think things can get tricky real fast, or you can't innovate, you just copy-paste stuff, right?"

This is the core insight: you need to understand what you're building, not just how to configure a framework.

When you use frameworks, you're not learning how AI systems work—you're learning how someone else thinks AI systems should work. And when their assumptions don't match your requirements, you're stuck.

The Production Reality Check

What causes agents to perform poorly? The LLM messes up. Why does the LLM mess up? Two reasons: (a) the model is not good enough, (b) the wrong (or incomplete) context is being passed to the model. From our experience, it is very frequently the second reason.

This is exactly the kind of problem that frameworks make harder to solve, not easier. When your context is being passed through multiple layers of abstraction, debugging becomes a nightmare. When you own the entire stack, you can trace exactly what's happening at every step.
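Tracing "exactly what's happening at every step" can be as small as a wrapper around the model call. The sketch below is an assumption about how one might do it, not a description of any particular codebase: `traced` and `sink` are hypothetical names, and `call_model` is whatever function sends messages to your provider.

```python
import copy
import time


def traced(call_model, sink):
    """Wrap call_model so every call records the exact messages sent and the reply."""
    def wrapper(messages):
        snapshot = copy.deepcopy(messages)   # freeze the context as the model saw it
        started = time.perf_counter()
        reply = call_model(messages)
        sink.append({
            "messages": snapshot,
            "reply": reply,
            "seconds": time.perf_counter() - started,
        })
        return reply
    return wrapper
```

When an answer looks wrong, the first question is "what context did the model receive?", and `sink` answers it directly. Writing the records to a log file or a database table instead of a list is a one-line change.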

The hard part of building reliable AI systems isn't orchestration—it's making sure you have the right data flowing to the right places at the right time. Frameworks add complexity to this critical path without adding value.

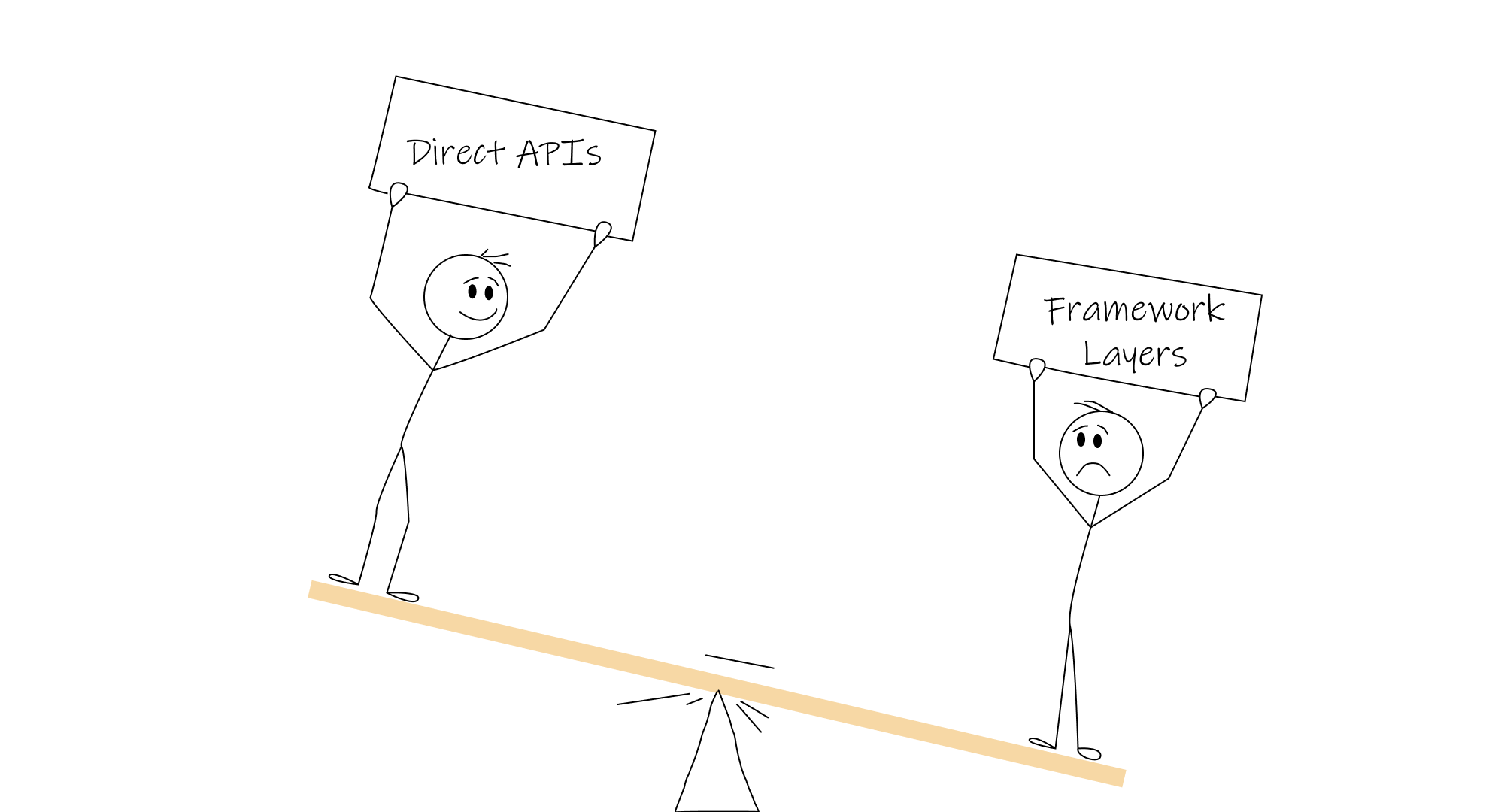

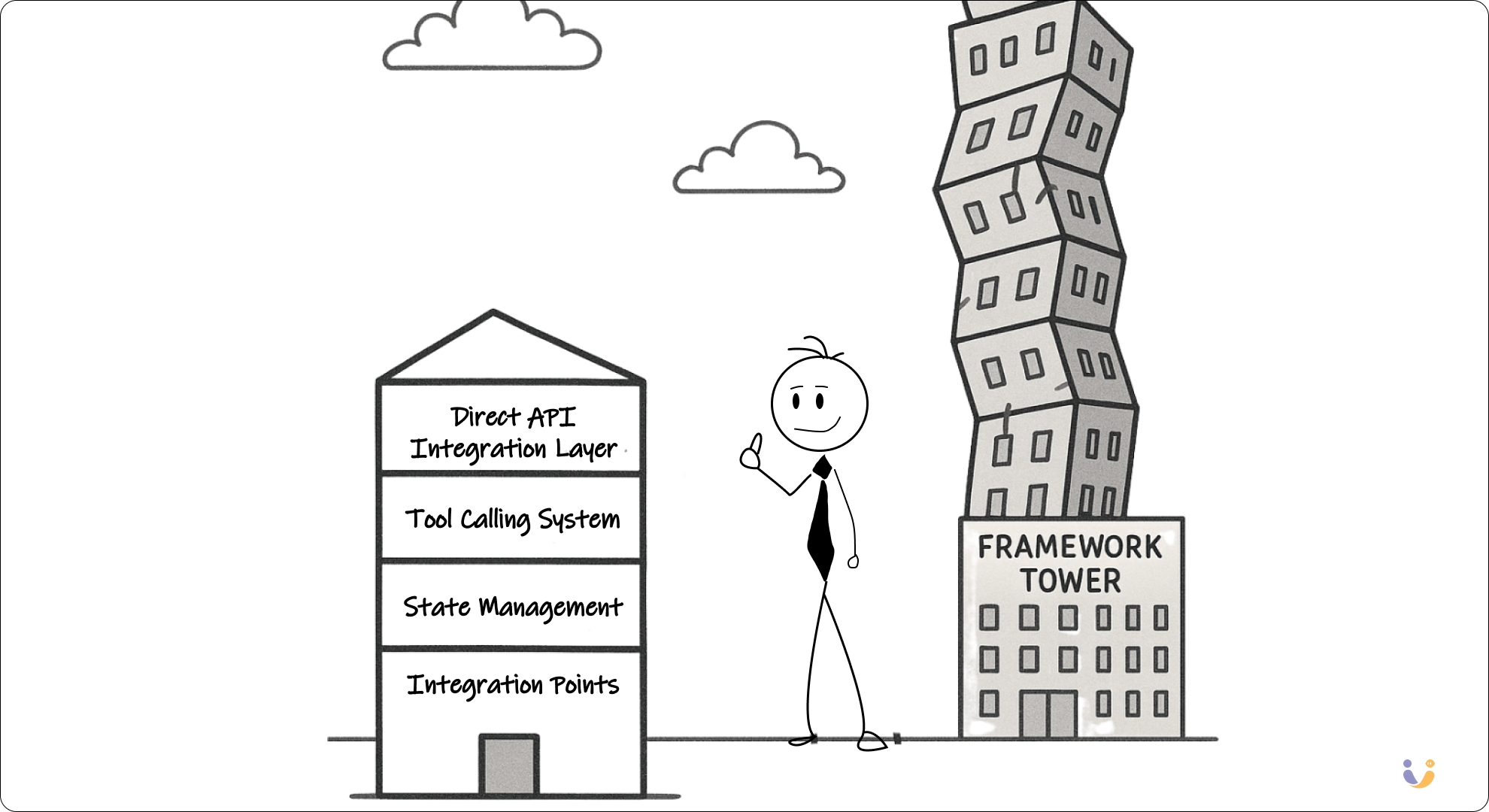

The Simple Architecture That Actually Works

Our final architecture looked nothing like the framework examples you see in tutorials. Instead of complex graphs and orchestration engines, we built:

Direct API Integration Layer

- Simple HTTP clients for each LLM provider

- Standardized request/response handling

- Built-in retry logic and error handling
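The retry logic mentioned above does not need a library. Here is a minimal sketch of exponential backoff with jitter; `TransientError` and `with_retries` are hypothetical names for this example, and deciding which failures count as transient (timeouts, HTTP 429, 5xx) is left to the caller.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, HTTP 429/5xx)."""


def with_retries(fn, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call fn(); on TransientError wait base_delay * 2**n plus jitter, then retry."""
    for n in range(attempts):
        try:
            return fn()
        except TransientError:
            if n == attempts - 1:
                raise                  # out of attempts: surface the error
            sleep(base_delay * 2 ** n + random.uniform(0, 0.1))
```

Passing `sleep` as a parameter keeps the function testable without real waiting, and any exception other than `TransientError` propagates immediately instead of being retried.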

Tool Calling System

- Direct integration with provider tool calling APIs

- No abstraction layer—just clean wrappers

- Easy to add new tools without framework constraints
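A "clean wrapper" for tool calling can be a dictionary of functions and one dispatch function. This sketch assumes the provider hands back a tool name and a JSON string of arguments; the exact response shape differs between providers, so the extraction step is left out. `TOOLS`, `tool`, `run_tool_call`, and the `get_weather` stub are all hypothetical.

```python
import json

TOOLS = {}


def tool(fn):
    """Register a plain function as a callable tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> str:
    return f"Sunny in {city}"   # stand-in for a real lookup


def run_tool_call(name, arguments_json):
    """Execute one tool call and return a JSON string to send back to the model."""
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        args = json.loads(arguments_json)
        return json.dumps({"result": TOOLS[name](**args)})
    except (TypeError, ValueError) as exc:   # bad JSON or wrong argument names
        return json.dumps({"error": str(exc)})
```

Adding a tool means writing a function and decorating it. Errors are returned as data rather than raised, so the model can see what went wrong and try again.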

State Management

- Basic data structures for conversation context

- Simple database persistence for long-term memory

- No complex orchestration—just clear data flow
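For the persistence piece, here is a minimal sketch using SQLite from the Python standard library. The `ConversationStore` class and its table layout are assumptions made for illustration; a production system might use a different database entirely.

```python
import sqlite3


class ConversationStore:
    """Append-only message log keyed by conversation id."""

    def __init__(self, path=":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS messages ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "conversation_id TEXT NOT NULL, "
            "role TEXT NOT NULL, "
            "content TEXT NOT NULL)"
        )

    def append(self, conversation_id, role, content):
        with self.db:   # commits on success, rolls back on error
            self.db.execute(
                "INSERT INTO messages (conversation_id, role, content) VALUES (?, ?, ?)",
                (conversation_id, role, content),
            )

    def history(self, conversation_id):
        rows = self.db.execute(
            "SELECT role, content FROM messages WHERE conversation_id = ? ORDER BY id",
            (conversation_id,),
        )
        return [{"role": role, "content": content} for role, content in rows]
```

`history()` returns the same list-of-dicts shape that chat APIs accept as `messages`, so the data flows from database to model call without a translation layer.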

Integration Points

- Standard HTTP APIs for external system integration

- Clean MCP client implementation

- Direct database connections where needed

Total complexity: About 1,000 lines of code. Total external dependencies: Maybe 5. Total framework abstractions: Zero.

The Framework-Free Philosophy

The experience taught me something fundamental about AI development in 2024: the technology is mature enough that you don't need training wheels anymore.

LLM APIs are stable and well-documented. Tool calling is standardized. The hard problems aren't in the plumbing—they're in the logic, the data flow, and the user experience. Frameworks solve yesterday's problems while creating today's bottlenecks.

As Paul observed: "If you don't feel the algorithm - like have a strong intuition about how components should work together - you can't innovate, you just copy paste stuff."

This is the real cost of frameworks: they prevent you from developing intuition about how AI systems actually work.

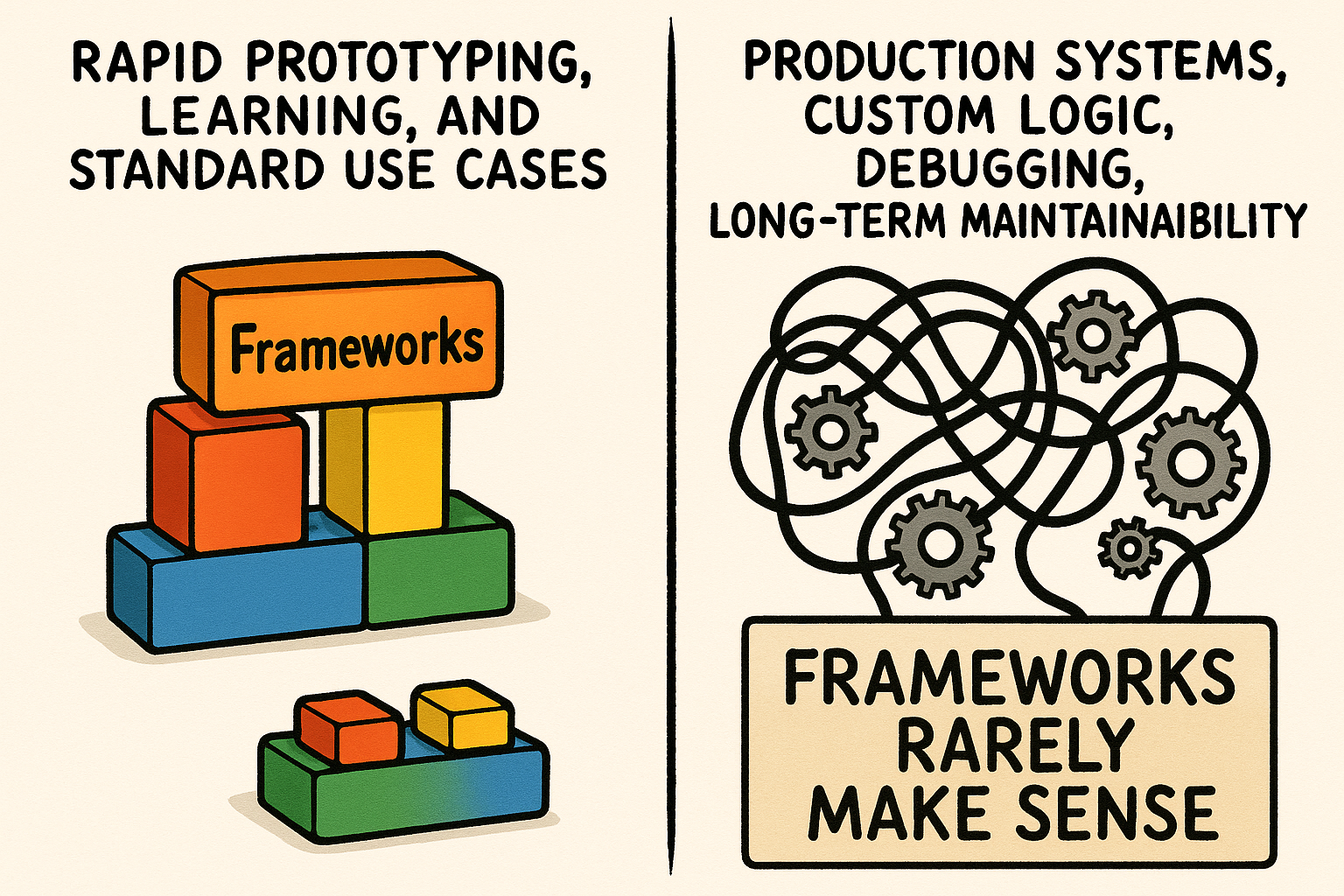

When Frameworks Make Sense (Spoiler: Rarely)

Don't get me wrong—frameworks have their place. They're useful for:

- Rapid prototyping when you need to validate an idea quickly

- Learning when you're first getting into AI development

- Standard use cases that fit perfectly within framework assumptions

But they're terrible for:

- Production systems that need reliability and performance

- Custom logic that doesn't fit framework patterns

- Systems you need to debug when things go wrong

- Long-term maintainability when requirements evolve

The frameworks aren't inherently evil—they're just optimized for the wrong things. They're optimized for demos and tutorials, not for production systems that need to work reliably at scale.

The Performance Revolution

The performance difference was staggering. Our framework-free system was:

- 10x faster to iterate on (no framework learning curve)

- 5x more reliable (no hidden framework bugs)

- 3x easier to debug (we owned every line of code)

- Infinitely more flexible (no framework constraints)

But the biggest win wasn't technical—it was psychological. We went from fighting our tools to working with them. We went from debugging abstractions to solving actual problems.

As the research shows: "However, this flexibility comes with its own set of challenges: Managing state and memory across tasks. Orchestrating multiple sub-agents and their communication schema. Making sure the tool calling is reliable and handling complex error."

These challenges are real, but frameworks don't solve them—they just hide them behind more complexity.

The Innovation Factor

Here's what nobody talks about: frameworks kill innovation.

When you're constrained by someone else's abstractions, you can only build what they imagined. When you own your stack, you can build what you need.

Our framework-free approach let us:

- Experiment with novel integration patterns that frameworks didn't support

- Optimize for our specific use case instead of generic requirements

- Iterate rapidly without waiting for framework updates

- Build exactly what we needed instead of working around limitations

The result was a system that actually worked for our users, not just for framework demos.

The Path Forward: Start Simple, Stay Simple

If you're building AI systems in 2024, here's my advice:

Start with the APIs. OpenAI, Anthropic, and others have excellent APIs. Learn them directly. Understand what you're actually calling and why.

Build your own thin wrappers. You need maybe 100 lines of code to create a clean interface to LLM APIs. Write it yourself. You'll understand it better and be able to debug it when things go wrong.
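As a rough idea of how thin such a wrapper can be, here is a sketch built only on the standard library. It targets OpenAI's Chat Completions endpoint and reads the reply from `choices[0].message.content` as that API is publicly documented; verify both against the current docs before relying on them. The function names and the injectable `post` parameter are assumptions made for this example.

```python
import json
import urllib.request

OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def http_post(url, headers, payload):
    """Default transport: POST JSON with the standard library, decode the JSON reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))


def chat(messages, model, api_key, post=http_post):
    """Send a list of chat messages and return the assistant's text reply."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    data = post(OPENAI_URL, headers, {"model": model, "messages": messages})
    return data["choices"][0]["message"]["content"]
```

Because the transport is a parameter, you can test `chat` with a fake `post` and no network, and supporting a second provider means writing a second small function like this one.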

Keep state management simple. Use basic data structures and databases. Don't get fancy until you have a reason to get fancy.

Add complexity only when needed. When you hit a real limitation—not a perceived one—then consider if a framework might help. But start simple.

Feel the algorithm. Understand how the pieces fit together. Don't let abstractions hide the actual logic from you.

The Bottom Line

We built a production-ready AI system without frameworks. It's faster, more reliable, easier to debug, and infinitely more flexible than anything we could have built with LangChain or similar tools.

The AI community needs to have an honest conversation about frameworks. They're not magic. They're not necessary. And they're often counterproductive.

The future of AI development isn't about better frameworks—it's about better understanding of the fundamentals.

Stop overthinking. Start building. Let the patterns emerge through iteration. And most importantly: feel the algorithm.

The AI revolution isn't being held back by a lack of frameworks—it's being held back by too many of them. Sometimes the most radical thing you can do is go back to the basics and build exactly what you need, exactly how you need it.